What’s of interest? People who frequently use ChatGPT for writing tasks are accurate and robust detectors of AI-generated text (PDF)

Tell me more!

My quick, non-AI summary is that small groups of humans who frequently use GenAI for writing are the best detectors of AI-generated writing. Humans!

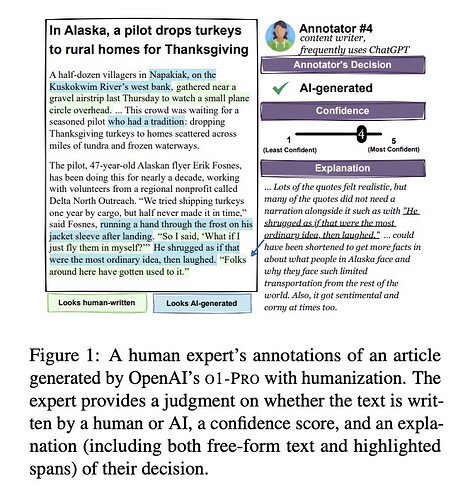

In this paper, we study how well humans can detect text generated by commercial LLMs (GPT-4o, Claude, o1). We hire annotators to read 300 non-fiction English articles, label them as either human-written or AI-generated, and provide paragraph-length explanations for their decisions. Our experiments show that annotators who frequently use LLMs for writing tasks excel at detecting AI-generated text, even without any specialized training or feedback. In fact, the majority vote among five such “expert” annotators misclassifies only 1 of 300 articles, significantly outperforming most commercial and open-source detectors we evaluated even in the presence of evasion tactics like paraphrasing and humanization. Qualitative analysis of the experts’ free-form explanations shows that while they rely heavily on specific lexical clues (‘AI vocabulary’), they also pick up on more complex phenomena within the text (e.g., formality, originality, clarity) that are challenging to assess for automatic detectors. We release our annotated dataset and code to spur future research into both human and automated detection of AI-generated text.

A GitHub repo for the article includes “a dataset of expert annotations of human-written and AI-generated articles. Expert annotations include a decision (Human-written or AI-generated), confidence score, and explanation. We also include the output of many automatic detectors.”
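To make the "majority vote among five annotators" idea concrete, here is a minimal sketch in Python. The article IDs, votes, and ground-truth labels are made up for illustration and are not taken from the paper's dataset; the only detail borrowed from the repo description is the two decision labels (Human-written or AI-generated). The function name `majority_vote` is my own.

```python
from collections import Counter

def majority_vote(labels):
    """Return the label chosen by the most annotators.

    With five annotators and two possible labels, a tie cannot occur.
    """
    counts = Counter(labels)
    label, _ = counts.most_common(1)[0]
    return label

# Hypothetical decisions from five annotators for two articles (not real data).
votes = {
    "article_1": ["AI-generated", "AI-generated", "Human-written",
                  "AI-generated", "AI-generated"],
    "article_2": ["Human-written", "Human-written", "AI-generated",
                  "Human-written", "Human-written"],
}

# Hypothetical ground truth for the same two articles.
truth = {"article_1": "AI-generated", "article_2": "Human-written"}

# Aggregate each article's votes, then count how many articles the panel got wrong.
predictions = {article: majority_vote(v) for article, v in votes.items()}
errors = sum(predictions[article] != truth[article] for article in truth)

print(predictions)
print("misclassified:", errors)
```

In this toy example one annotator disagrees on each article, yet the panel's combined decision is still correct, which is the effect the abstract describes at the scale of 300 articles.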

h/t Mignon Fogarty: "This whole article about who can and can't detect…" - zirkus

Where is it?: https://arxiv.org/pdf/2501.15654

This is one among many items I will regularly tag in Pinboard as oegconnect, and automatically post tagged as #OEGConnect to Mastodon. Do you know of something else we should share like this? Just reply below and we will check it out.

Or share it directly to the OEG Connect Sharing Zone