What’s of interest? WebVectors: distributional semantic models online

Tell me more!

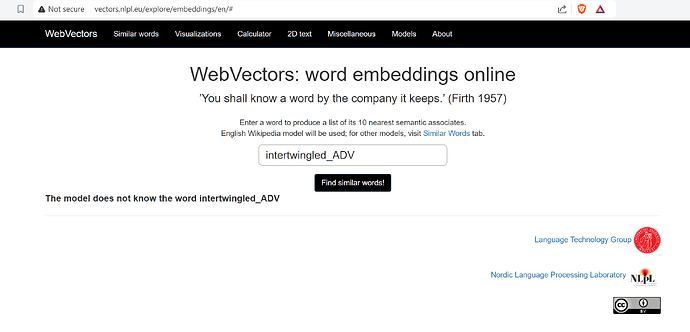

Enter a word to produce a list of its 10 nearest semantic associates.

The English Wikipedia model will be used here; for other models, visit the Similar Words tab.
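Conceptually, a query like this ranks every other word in the model's vocabulary by cosine similarity to the query word and keeps the top n. The sketch below assumes a tiny, made-up dictionary of 3-dimensional vectors (`toy_vectors`) and hypothetical helper names (`cosine`, `nearest_associates`). Real models are far larger, and WebVectors' own implementation may differ.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest_associates(word, vectors, n=10):
    """Return up to n (neighbour, similarity) pairs, most similar first."""
    query = vectors[word]
    scored = [
        (other, cosine(query, vec))
        for other, vec in vectors.items()
        if other != word  # the query word itself is not its own associate
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:n]


# Hypothetical toy vectors, invented purely for illustration.
toy_vectors = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "kitten": [0.95, 0.7, 0.05],
    "car": [0.1, 0.2, 0.9],
    "truck": [0.05, 0.3, 0.95],
}

for neighbour, sim in nearest_associates("cat", toy_vectors, n=3):
    print(f"{neighbour}\t{sim:.3f}")
```

With these toy values, "kitten" and "dog" come out on top for "cat", while the vehicle words score much lower.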

This service computes semantic relations between words in English and Norwegian. Semantic vectors reflect meaning based on the distribution of word co-occurrences in the training corpus (huge amounts of raw linguistic data).

In distributional semantics, words are usually represented as vectors in a multi-dimensional space of their contexts. Semantic similarity between two words is then trivially calculated as the cosine similarity between their corresponding vectors, which takes values between -1 and 1. A value of 0 means the words share no similar contexts, and thus their meanings are unrelated. A value of 1 means that the words’ contexts are identical, and thus their meanings are very similar.
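To make "vectors in a space of their contexts" concrete, here is a minimal count-based sketch: each word's vector records how often other words appear within one position of it, and similarity is the cosine between those count vectors. The corpus and function names are hypothetical, and raw counts are only an illustration; practical models apply weighting schemes or learn dense embeddings instead.

```python
import math
from collections import Counter


def context_vectors(sentences, window=1):
    """For each word, count how often every other word occurs within `window` positions."""
    counts = {}
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            ctx = counts.setdefault(word, Counter())
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    ctx[tokens[j]] += 1
    return counts


def cosine(c1, c2):
    """Cosine similarity between two sparse count vectors stored as Counters."""
    dot = sum(c1[k] * c2[k] for k in c1.keys() & c2.keys())
    norm1 = math.sqrt(sum(v * v for v in c1.values()))
    norm2 = math.sqrt(sum(v * v for v in c2.values()))
    return dot / (norm1 * norm2)


# Hypothetical toy corpus, invented for illustration.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "stocks rose sharply",
]
vecs = context_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))     # 1.0: identical contexts
print(round(cosine(vecs["cat"], vecs["stocks"]), 3))  # 0.0: no shared contexts
```

Because raw counts are never negative, this toy setup only produces values from 0 to 1; negative cosines arise with signed vectors such as learned embeddings.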

Recently, distributional semantics has received substantially growing attention. The main reason for this is the very promising approach of employing artificial neural networks to learn high-quality dense vectors (embeddings), using so-called predictive models. Possibly the best-known tool in this field today is word2vec, which allows very fast training compared to previous approaches.
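A predictive model learns by guessing words from their neighbours. In word2vec's skip-gram variant, each target word is trained to predict the words around it within a context window. The sketch below shows only the first step, turning a token sequence into (target, context) training pairs; the neural network itself, negative sampling, and other details of the real tool are omitted. The function name is hypothetical.

```python
def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs for every context word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


sentence = "word2vec learns dense word vectors".split()
for target, context in skipgram_pairs(sentence, window=1):
    print(target, "->", context)
```

During training, the model adjusts each word's vector so that it predicts its observed context words well; words that occur in similar contexts therefore end up with similar vectors.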

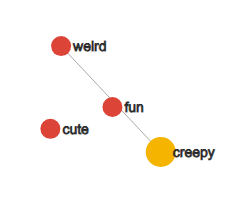

Unfortunately, training and querying neural embedding models on large corpora can be computationally expensive. Thus, we provide ready-made models trained on several corpora, along with a convenient web interface to query them. You can also download the models and process them on your own. Moreover, our web service features a bunch of (hopefully) useful visualizations of semantic relations between words. In general, the goal of WebVectors is to lower the barrier to entry for those who want to work in this new and exciting field.
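If a downloaded model happens to be in the plain-text word2vec format (a header line giving vocabulary size and dimensionality, then one word per line followed by its vector components), it can be read with only the standard library. Whether a given WebVectors model uses this format is an assumption here, so check the download page. For binary files, a library such as gensim (`KeyedVectors.load_word2vec_format`) is the usual route. The loader name and sample values below are hypothetical.

```python
import io


def load_word2vec_text(stream):
    """Parse the plain-text word2vec format into a {word: [floats]} dict."""
    header = stream.readline().split()
    vocab_size, dim = int(header[0]), int(header[1])
    vectors = {}
    for line in stream:
        parts = line.split()
        if len(parts) != dim + 1:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    if len(vectors) != vocab_size:
        print(f"warning: header promised {vocab_size} words, read {len(vectors)}")
    return vectors


# A tiny in-memory stand-in for a downloaded model file (hypothetical values).
sample = io.StringIO(
    "2 3\n"
    "cat 0.9 0.8 0.1\n"
    "car 0.1 0.2 0.9\n"
)
model = load_word2vec_text(sample)
print(model["cat"])
```

For a real file, pass an open text handle instead, e.g. `with open(path, encoding="utf-8") as f: model = load_word2vec_text(f)`.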

WebVectors is basically a tool to explore relations between words in distributional models. You can think of it as a kind of ‘semantic calculator’. A user can choose one or several models to work with: currently we provide five models trained on different corpora.
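The "calculator" idea rests on vector arithmetic: adding and subtracting word vectors, then looking up the word closest to the result. The classic example is king − man + woman ≈ queen. This is a minimal sketch with hypothetical 3-dimensional vectors and helper names; as is common practice, the input words are excluded from the answers.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(positive, negative, vectors, n=1):
    """Add the `positive` vectors, subtract the `negative` ones, and rank
    the remaining vocabulary by cosine similarity to the result."""
    dim = len(next(iter(vectors.values())))
    target = [0.0] * dim
    for word in positive:
        target = [t + x for t, x in zip(target, vectors[word])]
    for word in negative:
        target = [t - x for t, x in zip(target, vectors[word])]
    excluded = set(positive) | set(negative)  # input words are not valid answers
    ranked = sorted(
        (w for w in vectors if w not in excluded),
        key=lambda w: cosine(target, vectors[w]),
        reverse=True,
    )
    return ranked[:n]


# Hypothetical vectors; dimensions loosely mean (royalty, maleness, femaleness).
toy = {
    "king": [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}
print(analogy(["king", "woman"], ["man"], toy))
```

In real embeddings the dimensions are not human-readable like this, but the same add, subtract, and rank procedure applies.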

Where is it?: WebVectors: distributional semantic models online

This is one among many items I will regularly tag in Pinboard as oegconnect, and automatically post tagged as #OEGConnect to Mastodon. Do you know of something else we should share like this? Just reply below and we will check it out.

Or share it directly to the OEG Connect Sharing Zone