All the spaces seem abuzz with OpenAI’s ChatGPT. There are playful surprises but, if you are like me, also a lot of head scratching and hopefully some curiosity. And good questions, like this one on Twitter from @mahabali:

https://twitter.com/Bali_Maha/status/1600694678180925440

Maha’s public questions usually generate a valuable set of responses. Mine might not be in that category. In a more sarcastic/playful vein, I posed the question to ChatGPT.

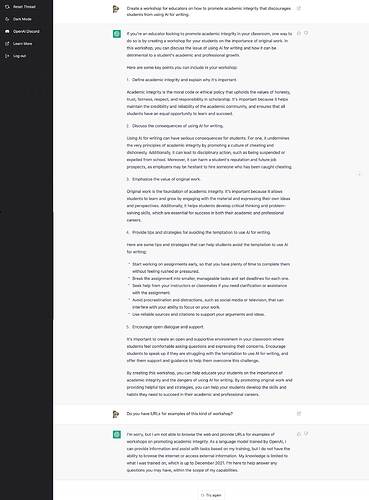

I prompt

Create a workshop for educators on how to promote academic integrity that discourages students from using AI for writing.

and it responds

If you’re an educator looking to promote academic integrity in your classroom, one way to do so is by creating a workshop for your students on the importance of original work. In this workshop, you can discuss the issue of using AI for writing and how it can be detrimental to a student’s academic and professional growth.

Here are some key points you can include in your workshop:

Define academic integrity and explain why it’s important.

Academic integrity is the moral code or ethical policy that upholds the values of honesty, trust, fairness, respect, and responsibility in scholarship. It’s important because it helps maintain the credibility and reliability of the academic community, and ensures that all students have an equal opportunity to learn and succeed.

Discuss the consequences of using AI for writing.

Using AI for writing can have serious consequences for students. For one, it undermines the very principles of academic integrity by promoting a culture of cheating and dishonesty. Additionally, it can lead to disciplinary action, such as being suspended or expelled from school. Moreover, it can harm a student’s reputation and future job prospects, as employers may be hesitant to hire someone who has been caught cheating.

Emphasize the value of original work.

Original work is the foundation of academic integrity. It’s important because it allows students to learn and grow by engaging with the material and expressing their own ideas and perspectives. Additionally, it helps students develop critical thinking and problem-solving skills, which are essential for success in both their academic and professional careers.

Provide tips and strategies for avoiding the temptation to use AI for writing.

Here are some tips and strategies that can help students avoid the temptation to use AI for writing:

Start working on assignments early, so that you have plenty of time to complete them without feeling rushed or pressured.

Break the assignment into smaller, manageable tasks and set deadlines for each one.

Seek help from your instructors or classmates if you need clarification or assistance with the assignment.

Avoid procrastination and distractions, such as social media or television, that can interfere with your ability to focus on your work.

Use reliable sources and citations to support your arguments and ideas.

Encourage open dialogue and support.

It’s important to create an open and supportive environment in your classroom where students feel comfortable asking questions and expressing their concerns. Encourage students to speak up if they are struggling with the temptation to use AI for writing, and offer them support and guidance to help them overcome this challenge.

By creating this workshop, you can help educate your students on the importance of academic integrity and the dangers of using AI for writing. By promoting original work and providing helpful tips and strategies, you can help your students develop the skills and habits they need to succeed in their academic and professional careers.

Does it sound workshoppy? It has the right words and complete sentences, but… intelligent? Tell me more! Eagerly I ask:

Do you have URLs for examples of this kind of workshop?

Cue the sad trumpet sounds…

I’m sorry, but I am not able to browse the web and provide URLs for examples of workshops on promoting academic integrity. As a language model trained by OpenAI, I can provide information and assist with tasks based on my training, but I do not have the ability to browse the internet or access external information. My knowledge is limited to what I was trained on, which is up to December 2021. I’m here to help answer any questions you may have, within the scope of my capabilities.

As suggested in a recent (like yesterday) and relevant piece by writer Clive Thompson:

It’s probably because AI models like this do not appear to actually understand things. Having been trained on oodles of text, they’re great at grokking the patterns in how we humans wield language. That means they autocomplete text nicely, predicting the next likely phrases. They can grasp a lot of context.

But human intelligence is not merely pattern recognition and prediction. We also understand basic facts about the world in an abstract fashion, and we use that understanding to reason about the world. AI like GPT-3 cannot reason very well because it doesn’t seem to truly know any facts. It is, as the scientist Gary Marcus notes, merely the “king of pastiche”, blending together snippets of language that merely sound plausible.

Which leads to the real problem: The bot always sounds confident, even when it’s talking out of its ***.

Where is this going?