While scanning Bryan Alexander’s recent Academia and AI newsletter issue on Generative AI at the end of 2024, I was intrigued by his examples of image descriptions by Mistral AI, so I did the obvious and tried a few examples (see below).

I’m curious to hear how others are faring with using generative AI for image descriptions.

But, maybe more for those deeper into this (cough cough @moodler): is Mistral open enough? Or open at all? Their site lists under Openness “We lead the market of open source generative technologies to bring trust and transparency in the field and foster decentralised technology development.”

Their model weights are available, though I have no idea what I would do with them. Their Pixtral Large model “demonstrates frontier-level image understanding. Particularly, the model is able to understand documents, charts and natural images, while maintaining the leading text-only understanding of Mistral Large 2.”

Alan’s Test (n=3)

Yes, the natural response is to try it on images. I chose three that I have used elsewhere and for which I had already written my own alt text descriptions from my own model (my brain).

Photo by Alan Levine shared into the public domain using CC0

I thought I had posted this somewhere with a description but am not finding it. Mistral AI described it this way (used in the alt text above):

This image depicts a tractor operating in a snowy field. The tractor is equipped with a front loader attachment, which is currently raised and appears to be holding snow. The tractor has large tires, suitable for navigating through snowy terrain. The background shows a flat, open field covered in snow, with some trees and a clear sky visible in the distance. The scene suggests that the tractor is being used for snow removal or other winter-related tasks.

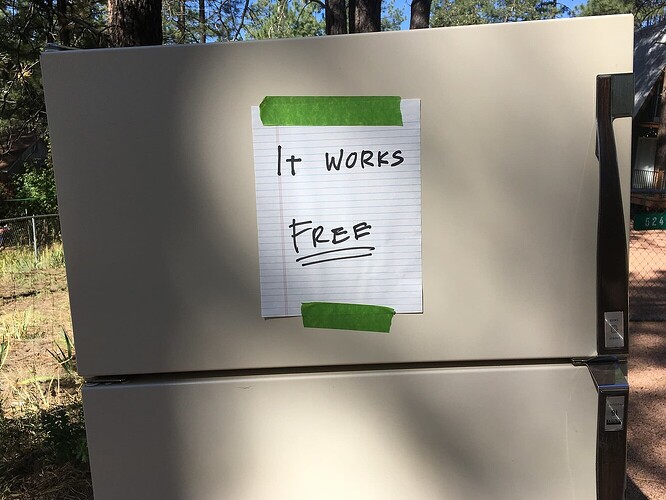

Here is a second test.

The Virtuous Properties flickr photo by cogdogblog shared into the public domain using Creative Commons Public Domain Dedication (CC0)

I used this for a blog post where I wrote this not so great alt text (now cringing at how weak it is):

Taped to the side of an abandoned refrigerator is a hand written sign reading “It Works, Free”

Mistral AI provided:

This image shows a refrigerator placed outdoors. Attached to the front of the refrigerator is a handwritten note on a piece of lined paper. The note reads, “It works. Free.” The note is secured to the refrigerator with green tape. The background shows a natural setting with trees and grass, indicating that the refrigerator is likely being offered for free to anyone who wants it.

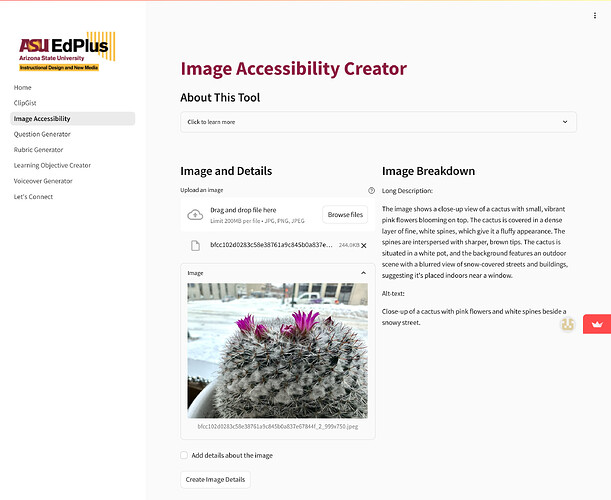

And finally, my third test was a photo I took in a local coffee shop.

Pixelfed photo by @cogdog licensed under Public Domain (CC0)

My alt text was, I feel, pretty good:

A small round shaped cactus sits in a bowl on a counter. It has small, delicate bright colored flower on its crown. Through the window behind is a snow covered street suggesting very cold temperature out there.

And here is Mistral AI’s description:

This image shows a close-up of a cactus plant with several bright pink flowers blooming at the top. The cactus has a round shape and is covered with numerous white, hair-like spines. The background is slightly blurred but appears to show a snowy outdoor scene with buildings and vehicles, suggesting that the cactus is indoors, possibly near a window. The contrast between the vibrant flowers and the snowy backdrop creates an interesting visual juxtaposition.

I have been making a focused effort for more than a year to practice regular image descriptions, and one of my go-to guides is Alex Chen’s How to Write an Image Description for its approach of “object-action-context.”

Is Mistral achieving this? From my bit of reading, an alt text description does not need to refer to itself as an image, so I would remove the “This image shows” or “This image depicts” and keep just the description. That’s minor. Mistral is doing a good job of describing and then ending on a bit of context or suggestion of meaning.
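That bit of trimming is easy to automate if you are pasting a lot of generated descriptions into alt text fields. Here is a minimal sketch in Python, assuming the description opens with one of the two lead-ins seen in my tests (or a close variant like “The photo shows”). The function name `strip_lead_in` and the list of words in the pattern are my own invention for illustration, not anything Mistral provides.

```python
import re

# Hypothetical helper for illustration only; the pattern covers the
# lead-ins seen in these tests plus a few assumed close variants.
_LEAD_IN = re.compile(
    r"^\s*(?:this|the)\s+(?:image|photo|picture)\s+(?:shows|depicts)\s+",
    re.IGNORECASE,
)


def strip_lead_in(description: str) -> str:
    """Drop a leading 'This image shows/depicts' and re-capitalize the rest."""
    trimmed = _LEAD_IN.sub("", description, count=1)
    if trimmed == description:
        # No lead-in found, so leave the description untouched.
        return description
    return trimmed[:1].upper() + trimmed[1:]


print(strip_lead_in("This image shows a refrigerator placed outdoors."))
# prints: A refrigerator placed outdoors.
```

A description that already starts with the object, like my cactus one, passes through unchanged. It would still need a human read afterwards, since the rest of the sentence may not stand on its own once the opener is gone.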

From my limited tests, Mistral does well. I’d bet it might struggle (as Bryan’s examples suggest) with words on the screen, although when you look at the examples in Mistral Large, it is showing not only transcription of text in images but also interpretation (I should try some charts and graphs).

But I have to say in my small test, wow.