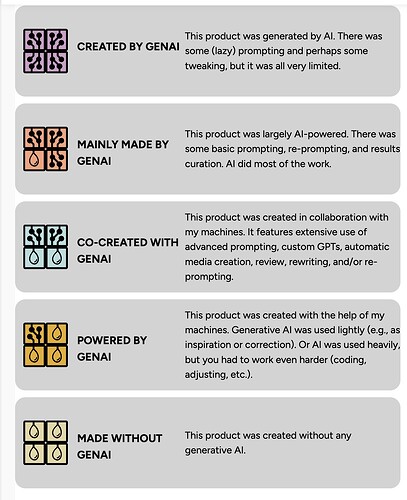

I’ve created an AI Declaration Statement template for our Pressbooks front matter, and all of the books we now publish will include one where relevant (template: AI Declaration Statement). After talking with my colleague about these MMM labels, I think I’m going to edit the template to recommend including them as well.

Here’s an example of one that I put together for a project that I’m currently working on:

AID Statement: Artificial Intelligence Tool: Claude Sonnet 4 (Anthropic) used during multiple work sessions between 26 June 2025 and 3 July 2025; Conceptualization: Claude was used to help develop the structure and organization of content revisions, particularly in determining how to address overlapping content across sections; Information Collection: Claude conducted web searches to identify authentic concerns and misconceptions about open education from academic literature and institutional resources; Writing—Review & Editing: Claude provided extensive revision and editing support to enhance content depth, improve consistency of structure, maintain a supportive educational tone, and refine explanations to better serve the faculty audience.

Human Author Role: The human author (project creator) retained full creative control and decision-making authority throughout the process, providing all original content strategy, institutional context, pedagogical approach, and final approval of all changes. The human author determined the overall vision, selected which AI suggestions to implement, and made all substantive decisions about content direction and tone.

I haven’t decided yet whether to include the “Human Author Role” statement. Is it necessary? What value does it add, or what role does it play? My name is on the work, so of course I’m ultimately responsible for the output. I asked Claude about it, and here’s its response:

Prompt (as a part of a longer conversation): Is adding “The human authors retained full creative control and decision-making authority throughout the process” necessary? What does including that add to the statement?

Response: Looking at the AID Framework examples, they don’t include statements about human creative control. The framework appears focused on factual disclosure of AI use rather than defensive assertions about human authority.

Adding “The human authors retained full creative control…” would:

Potentially detract by:

- Making the statement unnecessarily long

- Sounding defensive, as if AI use needs justification

- Adding language not modeled in the framework examples

- Implying that AI use somehow threatens authorial control

Doesn’t add value because:

- It’s already implied that you made decisions about what suggestions to accept/reject

- The framework assumes proper disclosure is sufficient

- Readers understand humans remain responsible for their work

The AID Framework seems designed to normalize AI use through transparent disclosure rather than requiring justification. The clean, factual approach of just stating what tools were used for what purposes is more aligned with the framework’s intent and creates a more confident, professional tone.

I’d recommend sticking with the straightforward disclosure without the additional statement.

Sorry for going off on a bit of a tangent!