Maybe for a change from all the conversation about ChatGPT take a look at an interesting development in the space of AI Generated imagery (picking up on a topic of interest here for a while).

Might it be possible to attribute source images used to create new ones with the Stable Diffusion generator? That is the premise of Stable Attribution

When an A.I. model is trained to create images from text, it uses a huge dataset of images and their corresponding captions. The model is trained by showing it the captions, and having it try to recreate the images associated with each one, as closely as possible.

The model learns both general concepts present in millions of images, like what humans look like, as well as more specific details like textures, environments, poses and compositions which are more uniquely identifiable.

Version 1 of Stable Attribution’s algorithm decodes an image generated by an A.I. model into the most similar examples from the data that the model was trained with. Usually, the image the model creates doesn’t exist in its training data - it’s new - but because of the training process, the most influential images are the most visually similar ones, especially in the details.

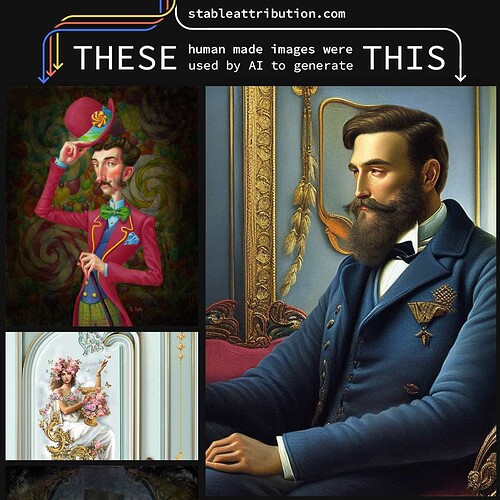

You can explore a collection of examples to see what Stable Attribution suggests is the images training source – see one example I randomly explored (note the download is just a screen shot showing the first 2 source images out of 15 identified)

Downloaded “attribution” for full results of Stable Diffusion sourcing for an AI generated image

It’s interesting and one can see elements of some of the source images, but I cannot say for sure I understand how Stable Diffusion used them to create the final image. It’s not just mixing pixels.

And there is some speculation that its not identifying the source images but ones that are similar in the training dataset

https://twitter.com/matdryhurst/status/1622416416996577280

What’s interesting is that Stable Diffusion is built upon the LAION Open dataset which is clearly defined as public, but the license gets fuzzy to me:

We distribute the metadata dataset (the parquet files) under the most open Creative Common CC-BY 4.0 license, which poses no particular restriction. The images are under their copyright.

At least Stable Attribution offers a means to identify sources of images in the training data set (I am not sure where it goes)

Is this attribution or is it not? Do we really know how these images are created from sources in the data set? It remains a black box we have to guess at.

And in the spirit of attribution thanks to Alec Tarkowski for sharing this in Mastodon