After an OEG Voices podcast recording session today with @poritzj I am refraining from using the AI phrase. Jonathan makes a strong case that what we are really talking about has nothing to do with intelligence, more properly called “large statistical models” Stay tuned for that episode to be out soon at https://voices.oeglobal.org/

The Girl in the Yellow Hat

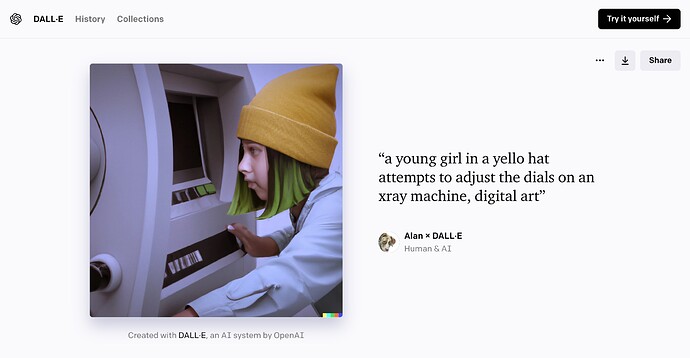

I am talking about these kinds of images (screenshot) I generated using DALL-E (it’s now open to anyone to use) that even managed to not get tripped up in my typo

“How does this machine work?” she wonders. “And what can I do with what it spits out? Who does it belong to?”

Lifting some from what I posted in the Creative Commons cc-edu Slack channel…

Beyond key issues of unclear bounds concepts of reuse go into the training set, and the muddy waters of copyright, I’d been thinking more practically as to what happens as content creators start to use imagery (and others to come).

What/how can they be licensed? And how the heck can they be attributed? I was talking about this in the office hours today with @nate who shared an example of how Creative Commons is starting to attribute such images

I commented that we have almost none of the TASL elements (from CC Attribution Best Practices- Title, Author, Source, License) to use.

But then I happened to look at my DALL-E account and noticed there is now a publish button, that makes the wok public (we now have the S) — see https://labs.openai.com/s/YDB15XNB5C9WytXJz9F3FSJW and they co-label me and DALL-E (“Human and AI”) as authors.

Their terms of use indicate (section 2.) DALL-E images can be used for any purpose (commercial too) but also (section 6) they assert ownership of the image. I own my prompt.

Who can explain all this to an educator or student now? What happens when they create such images and want to use in open content?

Tools Do Not get Credit, Who Does?

@poritzj replied in that Slack discussion (and discussed more in today’s podcast session:

Note that the CC AI WG wrote a bit about this whole topic…We essentially said that one should always think of the phrase “AI” as fancy, (albeit nonsensical,) PR-speak for “large statistical model” or, more succinctly, “tool.”

So giving you and the tool credit (as you said “Human and AI”) would be like giving copyright ownership for a work to “Ansel Adams and Nikon camera” or “Normal Rockwell and His Favorite Paintbrush”: pretty silly.

Yes, that makes sense. I do many of my graphics in PhotoShop but never give my tool credit.

But…

At the same time, how much credit can I, the human take? I did not do much beyond typing words in a box. I hardly feel like I created anything, if anything, I might iterated until the machine spits out something I like. How much agency have I exerted?

And however we characterize these tool, I am able to do make images I cannot quite as easily find. Going back to the girl in the yellow hat, Google Images (regardless of rights settings) gives me nothing close and even reducing keywords to girl yellow hat adjusts machine is nowhere close.

Likewise Openverse yields de nada on the full prompt again, too many keywords, even reducing to girl yellow hat leaves me empty photo hande.

And so it gets murky. Newer mobile phone cameras and web tool make it easy to take a dull or poorly lit photo and apply effects that before would have taken my manipulation in software. Whether it is artificial, intelligent, or just a statistical model, it’s pretty blurry who can take ownership.

More so how to we credit / attribute such media? Who gets credit? Are we on some new frontier of a wild wild image west?

A New or Just Unknown Frontier of Attribution?

Following the Creative Commons example Nate shared with me, but adding now links to the source image and the OpenAI terms of use, is it something like:

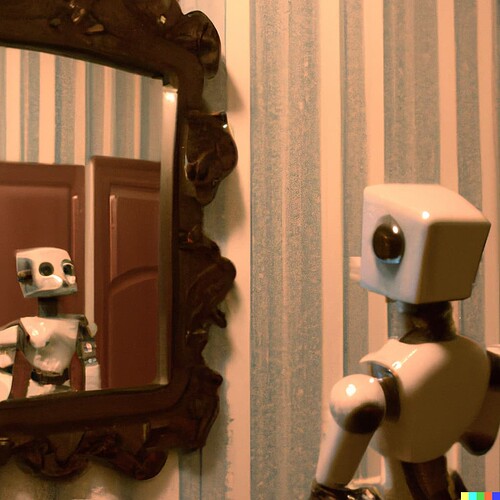

"Robot Wild West” by Alan Levine, image generated and regenerated by the DALL-E 2 AI platform with the text prompt “A robot sitting on a horse on a western plain Impressionist style.” OpenAI asserts ownership of DALL-E generated images; Alan dedicates any rights it holds to the image to the public domain via CC0.

That’s quite a mouthful.

Is anyone else curious about this stuff? Are you seeing people making use of these images? Where do we go from here?