Artificial Intelligence: can we understand it without being data scientists? Is it too complex to grapple with? Do we just have to trust the experts, like the ones who claim sentience?

No. I hope not.

But I do know I can better come to my own understanding if I do more than read papers and blog posts and actually dabble in a technology. I am somewhat propelled by a fabulous discussion started by @wernerio for the OEGlobal “Unconference”:

I want to connect… by doing AI.

Are You Seeking AI? flickr photo by cogdogblog shared into the public domain using Creative Commons Public Domain Dedication (CC0)

I started with the familiar move of signing up for a MOOC, AI for Everyone, taught by that guy who co-launched Coursera. As usual, I watched a few videos and then never went back.

One AI resource I might explore again is Hugging Face, which calls itself open source. A few years back I used it to show students how to create a bot that completes sentences using their Twitter history as the text source, see (my demo) or as an example

While I cannot make too much out of their open source code, I prefer to try to dabble myself to better understand the limits and potentials. I was interested in their free course in NLP, though I see it requires strong Python skills.
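For anyone who wants something to poke at without strong Python skills, here is a toy sketch of the sentence-completing bot idea. To be clear about the assumptions: this is not how Hugging Face models work and not the code behind my demo. It swaps in a much simpler technique, a word-level Markov chain, that only counts which word followed which in a text. The names `build_model` and `complete` and the sample `history` text are made up for illustration.

```python
import random
from collections import defaultdict


def build_model(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    model = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word].append(next_word)
    return model


def complete(model, prompt, max_words=10, seed=None):
    """Extend the prompt one word at a time by sampling observed followers."""
    rng = random.Random(seed)  # pass a seed to get repeatable output
    output = prompt.split()
    if not output:  # nothing to continue from
        return prompt
    for _ in range(max_words):
        followers = model.get(output[-1])
        if not followers:  # the last word was never followed by anything
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical stand-in for someone's tweet history
history = "i want to connect by doing ai . i want to dabble in a technology ."
model = build_model(history)
print(complete(model, "i want", max_words=5, seed=1))
```

Swap in your own text for `history` and the limits show up quickly: the bot can only ever repeat word pairs it has already seen, which is a useful contrast with what the big language models manage.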

Also something I spotted worth exploring:

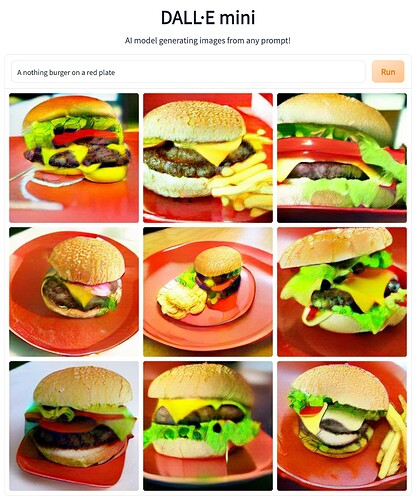

And I find these types of playful sites interesting.

I don’t know where I plan to go with digging into AI, but I want to get a better sense of what I can do hands-on.

Anyone want to explore too?

Note: This is one example of an Open Pedagogy Adventure topic - you can start your own here, just make a new topic, post, share, do something, update, repeat!