Elsewhere in OEG Connect I have been posting notes on trying out various new Artificial Intelligence tools.

This all started with one of many valuable critical perspectives on Artificial Intelligence by Emily Bender, specifically this one on whether AI can do literature review (the thread is well worth reading, as is her post on AI Hype Takedowns):

https://twitter.com/emilymbender/status/1518428712781238273

She refers to a new venture, Elicit, identified as an “AI Research Assistant”. If I understand correctly, it uses GPT-3 powered AI to help frame research questions and runs queries using the APIs for Semantic Scholar.

I decided to try it out, so I created an account. Besides the description of Elicit on its main web site, the email responding to my account creation contained a bit more:

> The main workflow in Elicit is literature review, which you see on the home page. This workflow tries to answer your research question with information from published papers.
>
> Elicit currently works best for answering questions with empirical research (e.g. randomized controlled trials). These tend to be questions in biomedicine, social science, and economics. Questions like “What are the effects of ____ on ____?” tend to do well.

The email also included a link to an explainer video. I am more the type to jump in and try things, then return to watch the video later.

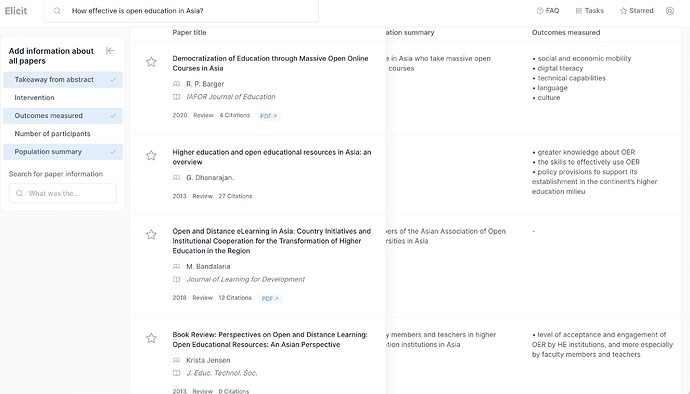

Also, my disclaimer is that I am not regularly a researcher; I just like to find and learn about things. Thinking of topics within OEGlobal's interest and scope, I experimented broadly with “How effective is open education in Asia?” and clicked to view the outcomes column.

Again, not being a researcher on this topic, I cannot judge the results.

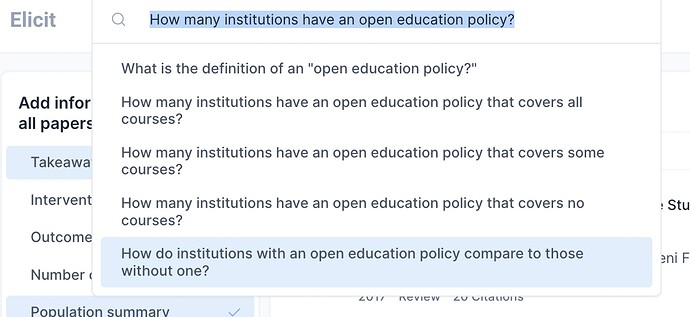

For a second try I entered “How many institutions have an open education policy?” Where I think Elicit might help is in how it suggests different search queries.

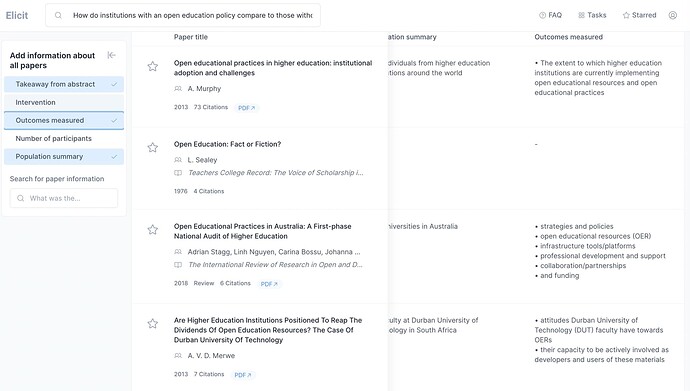

The last one looked most interesting: “How do institutions with an open education policy compare to those without one?”

Again, it’s outside my expertise to say how valid or invalid the AI is here. I would love to hear from the practicing researchers here on whether it is useful or not.

At least it’s a break from generating weird images of robots writing at a desk.

Is anyone willing to give Elicit a try and report back here?
